
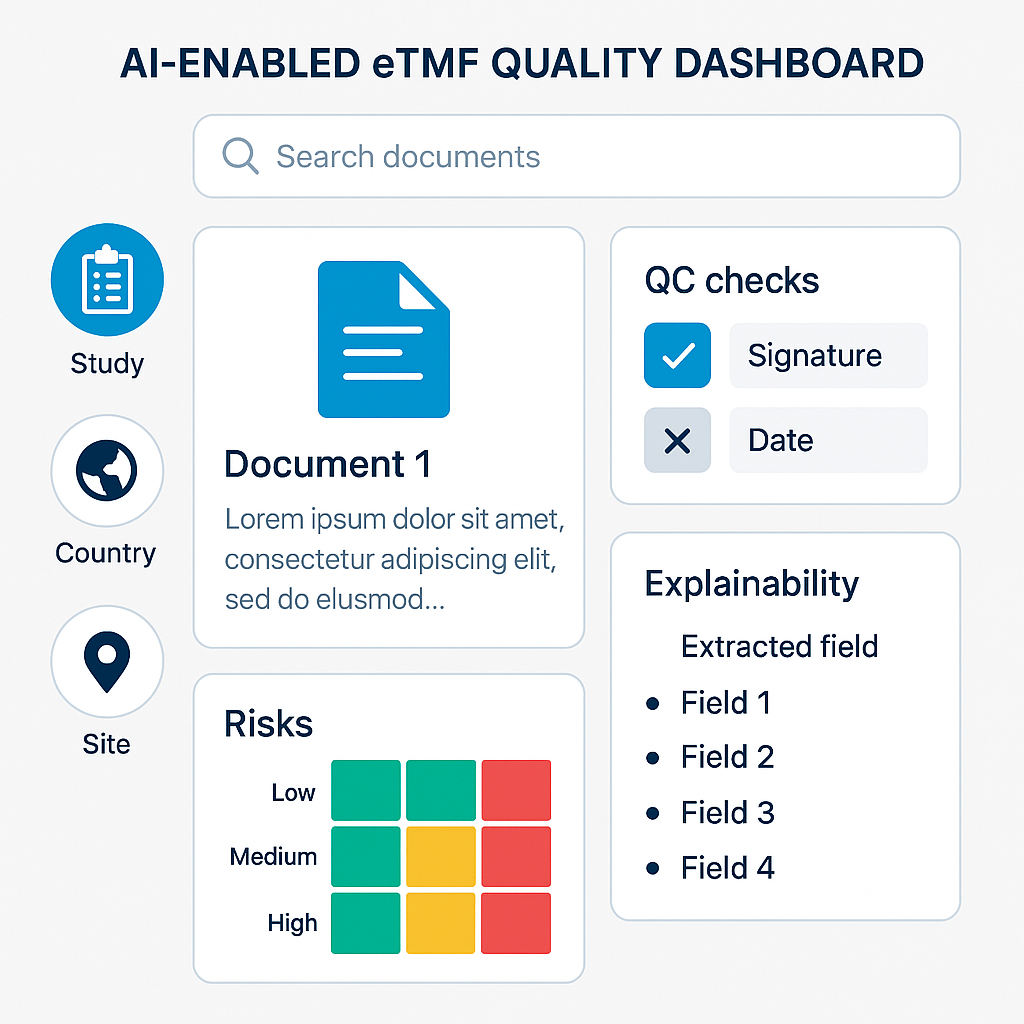

Practical, explainable GenAI that speeds eTMF work without risk.

Clinical trial teams are drowning in documents while regulators are raising the bar on reconstructability. The eTMF was supposed to be the cure: standard taxonomy, controlled access, faster inspection readiness. Instead, many programs have turned the eTMF into a high-latency bureaucracy: documents arrive late, metadata is inconsistent, and quality control (QC) becomes a grind of checkbox validation rather than evidence of trial integrity.

The core failure is not technology. It’s the operating model. Most organizations still treat the eTMF as a library (“file everything correctly”) rather than a decision system (“prove what happened, when, by whom, and under what controls”). That mindset produces predictable pathologies: teams optimize for completeness percentages, overproduce artifacts “just in case,” and push QC downstream until it becomes a crisis. Meanwhile, inspection reality doesn’t care about your dashboard. It cares whether the trial can be reconstructed with credible, contemporaneous evidence.

The pressure is intensifying. EU GCP inspections continue to surface large volumes of deficiencies; the EMA GCP Inspectors’ Working Group reported 720 deficiencies across 67 inspections in 2023 (an average of roughly 11 findings per inspection), including 36 critical and 336 major. That’s not a documentation footnote; it’s a systemic signal. And when inspection findings are analyzed across agencies, documentation repeatedly tops the list: in one comparative analysis of FDA and EMA inspection findings, documentation accounted for 46% of EMA clinical investigator findings and 45% of EMA sponsor/CRO findings. This is the environment in which GenAI is being pitched as an eTMF “copilot.” If we deploy it as a faster librarian, we’ll get faster chaos. If we deploy it as a safer evidence engine, we can change the game.

The uncomfortable truth: your eTMF is not inspection-ready because it’s not query-ready

The industry’s most repeated “best practice” is simple: maximize completeness. Adopt the TMF Reference Model, enforce filing rules, run QC checklists, drive completeness to 95–100%. This approach is comforting because it’s measurable. It’s also becoming ineffective.

Why? Because completeness is a proxy metric that fails under modern trial complexity. Start-up alone is document-heavy; one industry analysis notes that 53% of artifacts that end up in the TMF are associated with study start-up. That’s before the trial even hits operational stride. Completeness-driven programs respond by scaling manual QC and escalating bureaucracy. The result is predictable: QC becomes the bottleneck, not quality. Teams spend time validating metadata fields while high-risk evidentiary gaps remain hidden—like missing delegation-of-authority traceability, inconsistent training evidence, or ambiguous oversight records (the kinds of things regulators actually probe).

Even worse, completeness thinking pushes organizations to “QC everything equally.” In a world of constrained resources, equal treatment is not fairness—it’s negligence. Some artifacts are low-risk; others are pivotal to subject protection, data integrity, and oversight. A single missing or inconsistent document trail can matter more than 500 perfectly filed routine items.

Now add business pressure. Delays are expensive, and the opportunity cost is no longer abstract. Tufts CSDD’s updated estimates suggest that a single day of delay equals approximately $500,000 in lost prescription drug/biologic sales, and that direct trial costs run around $40,000 per day for Phase II–III trials. When QC becomes a throughput tax, it quietly becomes a value leak.

So here’s the contrarian position:

Stop treating completeness as the goal. Treat answerability as the goal.

If your eTMF cannot answer inspection-grade questions quickly—with traceable evidence—you are not ready, regardless of your completeness score.

Where GenAI actually helps: three capabilities, one principle

GenAI brings real value to eTMF only when it is constrained by a single principle:

No claim without evidence.

If the model can’t point to the source excerpt, the document version, the timestamp, and the role-based access context, it shouldn’t be allowed to “sound right.”

When engineered with that principle, GenAI unlocks three high-impact capabilities:

1) Summaries that reduce cognitive load without inventing facts

The first legitimate GenAI win in eTMF is not “autofiling.” It’s inspection-grade summarization: concise, structured summaries of essential documents (e.g., monitoring visit reports, CAPAs, vendor oversight evidence, training records) that explicitly cite the source artifacts and highlight deltas across versions. This matters because most TMF failures are not about missing documents alone—they’re about missing coherence: inconsistencies, gaps, and weak traceability across the story of the trial.

2) Natural-language queries that behave like cross-functional experts

A mature GenAI eTMF layer enables users to ask:

- “Show me all sites where delegation logs were updated after first IP shipment.”
- “Which monitoring visit reports mention informed consent deviations, and what CAPA evidence is filed?”
- “Where do vendor oversight reports conflict with issue escalation logs?”

Done correctly, this is not keyword search. It’s semantic retrieval + controlled reasoning, grounded in your TMF corpus with strict permissioning and auditable outputs.
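
As a rough illustration, the first question above reduces to a structured filter once the relevant dates have been extracted from the filed artifacts. The sketch below assumes a hypothetical `SiteRecord` type and made-up site data; in a real deployment a retrieval layer would populate these fields from the TMF and attach the source documents to each hit.

```python
from dataclasses import dataclass, field
from datetime import date


@dataclass
class SiteRecord:
    """Hypothetical per-site view assembled from TMF metadata."""
    site_id: str
    first_ip_shipment: date
    delegation_log_updates: list[date] = field(default_factory=list)


def sites_with_late_delegation_updates(sites: list[SiteRecord]) -> dict[str, list[date]]:
    """Return, per site, the delegation log updates dated after first IP shipment."""
    hits = {}
    for site in sites:
        late = [d for d in site.delegation_log_updates if d > site.first_ip_shipment]
        if late:
            hits[site.site_id] = sorted(late)
    return hits


# Illustrative data only.
sites = [
    SiteRecord("S001", date(2024, 3, 1), [date(2024, 2, 10), date(2024, 4, 2)]),
    SiteRecord("S002", date(2024, 3, 5), [date(2024, 2, 20)]),
]
late_updates = sites_with_late_delegation_updates(sites)
```

The value of the natural-language layer is translating the question into a filter like this one and returning the matching evidence, not replacing the filter with free-form generation.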

3) Safer QC that targets risk, not volume

GenAI becomes transformative when QC shifts from “review every field” to “hunt the few things that can sink you.” That means risk-based QC powered by anomaly detection, inconsistency checks, and cross-document validation. The model flags what humans are bad at spotting across thousands of documents: temporal contradictions, missing prerequisite evidence, suspicious boilerplate reuse, inconsistent site identifiers, or oversight gaps across vendors.

This is how GenAI reduces QC cost and increases QC effectiveness—if you let go of the myth that QC equals manual review.

A step-by-step framework: the SQRC model (Summarize → Query → Risk-score → Confirm)

Most GenAI eTMF initiatives fail because they start with models and end with disappointment. Start with the workflow. Here is a practical framework that is both modern and defensible:

Step 1: Define “inspection questions” as first-class requirements

Build an “inspection question bank” that mirrors how inspectors think: reconstructability, oversight, training, deviations, safety reporting, vendor controls, data integrity, and computerized system compliance. Convert each question into:

- required evidence artifacts,
- acceptable alternatives,
- timing rules (what must exist before what),
- and ownership (who is accountable).

This turns the eTMF from a storage system into a proof system.
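
One way to make a question bank entry concrete is a small record that carries the four elements listed above. This is a minimal sketch; the class name, field names, and artifact labels are assumptions for illustration, not a TMF Reference Model schema.

```python
from dataclasses import dataclass, field


@dataclass
class InspectionQuestion:
    """One entry in a hypothetical inspection question bank."""
    question: str
    required_artifacts: list[str]
    # Maps a required artifact to the artifacts accepted in its place.
    acceptable_alternatives: dict[str, list[str]] = field(default_factory=dict)
    # Each pair reads (must_exist_first, depends_on_it).
    timing_rules: list[tuple[str, str]] = field(default_factory=list)
    owner: str = "unassigned"

    def missing_evidence(self, filed: set[str]) -> list[str]:
        """List required artifacts that are neither filed nor covered by an alternative."""
        missing = []
        for artifact in self.required_artifacts:
            alternatives = self.acceptable_alternatives.get(artifact, [])
            if artifact not in filed and not any(a in filed for a in alternatives):
                missing.append(artifact)
        return missing


q = InspectionQuestion(
    question="Was site staff trained before performing delegated tasks?",
    required_artifacts=["training_record", "delegation_log"],
    acceptable_alternatives={"training_record": ["training_certificate"]},
    timing_rules=[("training_record", "delegation_log")],
    owner="Clinical Operations",
)
gaps = q.missing_evidence({"training_certificate"})
```

Here the certificate stands in for the training record, so only the delegation log is reported as a gap.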

Step 2: Enforce evidence-grounded summarization (with citations and deltas)

Every summary must include:

- citations to exact document sections,
- version diffs (“what changed vs v2?”),
- and an explicit uncertainty indicator when evidence is ambiguous.

If the model cannot cite, it must abstain. This is how you prevent “confident nonsense” from entering regulated workflows.
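
The cite-or-abstain rule can be enforced as a gate on model output rather than left to prompting. The sketch below assumes the model returns claims in a structured form; `Citation`, `SummaryClaim`, and the sample document IDs are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Citation:
    document_id: str
    version: str
    section: str
    excerpt: str


@dataclass(frozen=True)
class SummaryClaim:
    text: str
    citations: tuple[Citation, ...] = ()
    uncertain: bool = False  # set when the cited evidence is ambiguous


def enforce_cite_or_abstain(claims: list[SummaryClaim]) -> tuple[list[SummaryClaim], list[str]]:
    """Keep claims backed by at least one non-empty excerpt; return the rest as abstentions."""
    accepted, abstained = [], []
    for claim in claims:
        if any(c.excerpt.strip() for c in claim.citations):
            accepted.append(claim)
        else:
            abstained.append(claim.text)
    return accepted, abstained


# Illustrative model output: one sourced claim, one unsourced claim.
claims = [
    SummaryClaim(
        "CAPA-3 was closed after the follow-up monitoring visit.",
        (Citation("MVR-12", "v3", "4.2", "CAPA-3 verified closed during visit."),),
    ),
    SummaryClaim("All site staff were retrained."),
]
accepted, abstained = enforce_cite_or_abstain(claims)
```

Only the accepted claims would reach the summary; the abstained text is kept so reviewers can see what the model could not support.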

Step 3: Create a semantic evidence graph, not just a search index

Indexing documents is not enough. You need relationships:

- site ↔ subject ↔ consent ↔ deviation ↔ CAPA ↔ monitoring ↔ oversight
- vendor ↔ deliverable ↔ quality event ↔ escalation ↔ resolution
- training ↔ role ↔ delegation ↔ task execution

This evidence graph is the backbone that makes queries reliable and QC automation meaningful.
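
To show what the relationships buy you, here is a minimal in-memory sketch: nodes are typed identifiers, edges are links between them, and a breadth-first search checks whether a chain of evidence exists. The `EvidenceGraph` class and the node IDs are assumptions; a production system would more likely sit on a graph database.

```python
from collections import defaultdict, deque


class EvidenceGraph:
    """Tiny undirected graph of typed nodes such as ('site', 'S001')."""

    def __init__(self):
        self._edges = defaultdict(set)

    def link(self, a, b):
        """Record a relationship between two nodes in both directions."""
        self._edges[a].add(b)
        self._edges[b].add(a)

    def path(self, start, goal):
        """Breadth-first search; returns the shortest chain of nodes, or None."""
        queue = deque([[start]])
        seen = {start}
        while queue:
            chain = queue.popleft()
            if chain[-1] == goal:
                return chain
            for neighbour in self._edges[chain[-1]]:
                if neighbour not in seen:
                    seen.add(neighbour)
                    queue.append(chain + [neighbour])
        return None


graph = EvidenceGraph()
graph.link(("deviation", "DEV-7"), ("capa", "CAPA-3"))
graph.link(("capa", "CAPA-3"), ("monitoring", "MVR-12"))

traced = graph.path(("deviation", "DEV-7"), ("monitoring", "MVR-12"))
untraced = graph.path(("deviation", "DEV-9"), ("monitoring", "MVR-12"))
```

A deviation with no path to a CAPA or a monitoring record, like `DEV-9` here, is exactly the kind of broken chain that a flat search index cannot surface.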

Step 4: Shift QC from checklisting to risk scoring

Assign risk weights by artifact class and context. For example:

- High risk: informed consent evidence, safety reporting traceability, oversight records, protocol deviation documentation, computerized system validation evidence.
- Medium risk: monitoring narrative coherence, vendor deliverables, training/delegation consistency.
- Low risk: routine administrative items with minimal inspection relevance.

Then deploy GenAI to generate risk signals, such as:

- missing prerequisites (e.g., training evidence missing before delegated activity),
- temporal anomalies (dates that don’t make operational sense),
- cross-document contradictions (monitoring narrative vs issue logs),
- suspicious uniformity (templated text reused across sites without site-specific data).

Humans review the exceptions, not the universe.
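
The weighting and triage described above can be sketched in a few lines. The weights, threshold, severity values, and artifact IDs below are illustrative assumptions; real values would come from a documented risk assessment, and the signals themselves from rules or model output.

```python
from dataclasses import dataclass
from datetime import date

# Illustrative weights only, keyed by the risk classes described above.
RISK_WEIGHT = {"high": 3.0, "medium": 2.0, "low": 1.0}


@dataclass
class Signal:
    artifact_id: str
    risk_class: str   # "high", "medium", or "low"
    rule: str
    severity: float   # 0.0 to 1.0, assigned by the rule that fired

    @property
    def score(self) -> float:
        return RISK_WEIGHT[self.risk_class] * self.severity


def training_before_delegation(artifact_id, trained_on, delegated_on):
    """Flag a delegated activity with no training evidence dated on or before it."""
    if trained_on is None or trained_on > delegated_on:
        return Signal(artifact_id, "medium", "missing_prerequisite", 1.0)
    return None


def review_queue(signals, threshold=1.5):
    """Return signals at or above the threshold, highest score first."""
    flagged = [s for s in signals if s is not None and s.score >= threshold]
    return sorted(flagged, key=lambda s: s.score, reverse=True)


signals = [
    training_before_delegation("DOA-S001-04", date(2024, 5, 2), date(2024, 4, 20)),
    training_before_delegation("DOA-S002-01", date(2024, 1, 5), date(2024, 2, 1)),
    Signal("ICF-S003-11", "high", "temporal_anomaly", 0.9),
    Signal("ADM-0042", "low", "suspicious_uniformity", 0.8),
]
queue = review_queue(signals)
```

With these inputs the consent anomaly ranks first, the late training record second, and the low-risk administrative item never reaches a reviewer.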

Step 5: Implement “QC with receipts” (audit-ready outputs)

Every GenAI QC flag must produce:

- the evidence excerpt(s),
- the rule triggered,
- the confidence score,
- the user who accepted/overrode the flag,
- and the reason for override.

This is the difference between “AI suggestions” and a defensible quality system.
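
A receipt can be modeled as a record that refuses an override without a documented reason. This is a sketch under assumptions: the `QCReceipt` fields mirror the list above, while the decision states, user name, and serialization format are hypothetical choices.

```python
import json
from dataclasses import dataclass, asdict
from datetime import datetime, timezone
from typing import Optional


@dataclass
class QCReceipt:
    flag_id: str
    evidence_excerpts: list[str]
    rule_triggered: str
    confidence: float
    decision: str = "pending"            # "pending", "accepted", or "overridden"
    decided_by: Optional[str] = None
    override_reason: Optional[str] = None
    decided_at: Optional[str] = None     # ISO 8601 timestamp in UTC

    def decide(self, user: str, decision: str, reason: Optional[str] = None) -> None:
        """Record a reviewer decision; an override must carry a reason."""
        if decision not in {"accepted", "overridden"}:
            raise ValueError("decision must be 'accepted' or 'overridden'")
        if decision == "overridden" and not reason:
            raise ValueError("an override requires a documented reason")
        self.decision = decision
        self.decided_by = user
        self.override_reason = reason
        self.decided_at = datetime.now(timezone.utc).isoformat()

    def to_json(self) -> str:
        """Serialize the receipt for storage in an audit trail."""
        return json.dumps(asdict(self), sort_keys=True)


receipt = QCReceipt(
    flag_id="FLAG-0017",
    evidence_excerpts=["Training completed 02-May-2024", "Task delegated 20-Apr-2024"],
    rule_triggered="missing_prerequisite",
    confidence=0.86,
)
receipt.decide("j.doe", "overridden", reason="Training date corrected in v2 of the record.")
record = json.loads(receipt.to_json())
```

Storing the serialized receipt alongside the flagged artifact keeps the decision and its justification reconstructable later.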

Step 6: Lock down model behavior with guardrails that regulators will respect

At minimum:

- retrieval restricted to authorized content (role, study, country),
- prompt and response logging for audit,
- approved model/version registry,
- redaction controls for sensitive content,
- and drift monitoring with periodic re-validation.

This is not optional if GenAI touches regulated evidence.
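
Three of these guardrails (authorized retrieval, the model registry, and audit logging) can be sketched as a single wrapper around retrieval. All names below, including the registry entry `("summarizer", "1.4.2")`, are hypothetical; redaction and drift monitoring are out of scope for this sketch.

```python
from dataclasses import dataclass

APPROVED_MODELS = {("summarizer", "1.4.2")}  # hypothetical registry entries


@dataclass(frozen=True)
class User:
    user_id: str
    role: str
    studies: frozenset
    countries: frozenset


@dataclass(frozen=True)
class Doc:
    doc_id: str
    study: str
    country: str
    allowed_roles: frozenset


def authorized_docs(user, candidates):
    """Filter retrieval candidates before anything reaches the model."""
    return [
        d for d in candidates
        if d.study in user.studies
        and d.country in user.countries
        and user.role in d.allowed_roles
    ]


def guarded_retrieve(user, candidates, model, version, audit_log):
    """Refuse unregistered models, filter by authorization, and log the request."""
    if (model, version) not in APPROVED_MODELS:
        raise PermissionError(f"{model} {version} is not in the approved registry")
    docs = authorized_docs(user, candidates)
    audit_log.append({
        "user": user.user_id,
        "model": f"{model}:{version}",
        "returned": [d.doc_id for d in docs],
    })
    return docs


user = User("u1", "cra", frozenset({"ST-01"}), frozenset({"DE"}))
docs = [
    Doc("D1", "ST-01", "DE", frozenset({"cra", "qa"})),
    Doc("D2", "ST-01", "FR", frozenset({"cra"})),
    Doc("D3", "ST-02", "DE", frozenset({"cra"})),
]
audit_log = []
visible = guarded_retrieve(user, docs, "summarizer", "1.4.2", audit_log)
```

Filtering before generation matters: content the user cannot see never enters the model context, so it cannot leak into a summary.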

Step 7: Measure success by answerability and risk reduction, not completeness

Replace vanity metrics with operational truth:

- time-to-answer top inspection questions,
- number of high-risk gaps detected pre-inspection,
- cycle time reduction for QC queues,
- and reduction in late filing of essential documents.

Completeness can remain a hygiene metric. It should not be your north star.
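
As one possible way to track the first metric, time-to-answer can be summarized per question set against a target. The function name, the 30-minute target, and the timings below are assumptions chosen for illustration.

```python
from statistics import median


def answerability_report(answer_minutes: dict[str, float], target_minutes: float) -> dict:
    """Summarize time-to-answer for a set of inspection questions."""
    slow = sorted(q for q, m in answer_minutes.items() if m > target_minutes)
    return {
        "median_minutes": median(answer_minutes.values()),
        "share_within_target": 1 - len(slow) / len(answer_minutes),
        "questions_over_target": slow,
    }


# Illustrative timings from a mock inspection drill, in minutes.
timings = {"Q1": 12, "Q2": 45, "Q3": 8, "Q4": 95}
report = answerability_report(timings, target_minutes=30)
```

Tracking the questions that miss the target, rather than only the median, points directly at the evidence chains that need work.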

The provocative conclusion: GenAI won’t fix your eTMF. It will expose it.

GenAI is a mirror. If your TMF operations are inconsistent, your metadata is unreliable, and your oversight story is fragmented, GenAI will not magically create quality. It will surface contradictions faster. That’s a feature, not a bug—if you’re willing to act on what it reveals.

The organizations that win will stop idolizing “perfect filing” and start building provable trial narratives. They will demand that every summary is sourced, every query is auditable, and every QC decision is traceable. In a world where documentation findings are consistently among the most common inspection issues and inspections continue to yield substantial deficiencies, the competitive advantage is not a prettier dashboard. It is an eTMF that can answer hard questions quickly, safely, and with receipts, before an inspector asks them.

If you adopt GenAI with the SQRC model, you don’t just accelerate TMF work. You change what “TMF quality” actually means: from a warehouse of artifacts to a system that proves, on demand, that the trial was run with control.
