Request a demo specialized to your need.

Standardize eTMF metadata so AI, QC, and audits work reliably.

As clinical operations embrace artificial intelligence and automation, many organizations discover an uncomfortable truth: their eTMF is not AI-ready—not because of weak algorithms, but because of weak metadata governance. Metadata is the backbone of document quality, discoverability, system interoperability, and inspection readiness. Without clear definitions, ownership, and enforcement, even the most advanced eTMF platforms devolve into inconsistent artifact naming, missing attributes, and manual reconciliation across CTMS, EDC, and regulatory workflows.

Thought leaders in clinical quality are reframing metadata governance not as a configuration task, but as a scalable operating model—one that enables AI, reduces risk, and sustains inspection readiness by design.

Defining Canonical eTMF Metadata That Scales

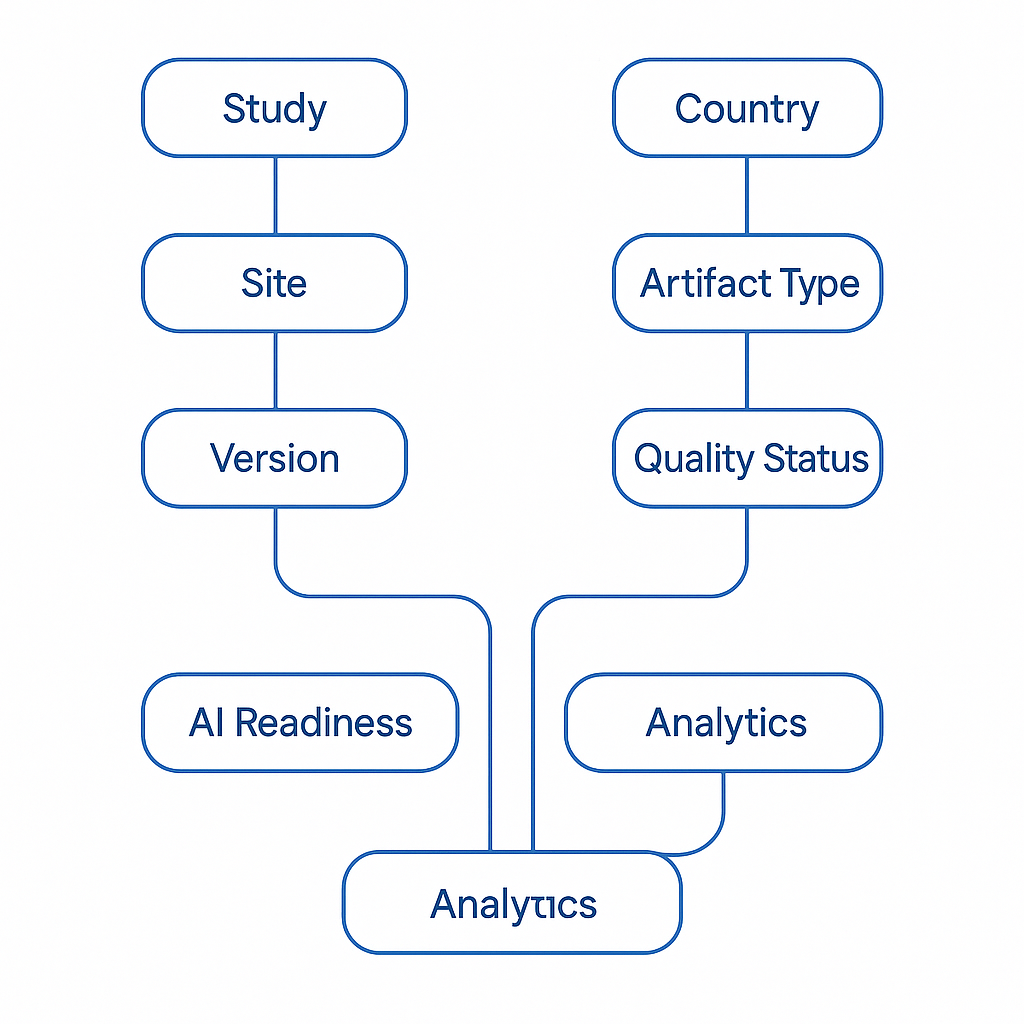

AI-ready eTMF operations begin with a canonical metadata schema—a single, authoritative definition of what every artifact must carry, regardless of study, geography, or system of origin. At a minimum, this schema should include:

-

Study identifier

-

Country and site

-

Artifact type and sub-classification

-

Version and effective date

-

Author and functional owner

-

Signer (where applicable)

-

Lifecycle status

-

Linkage to upstream operational events (for example, site activation or protocol amendment)

These definitions must be written in plain, operational language, not technical jargon, and explicitly mapped to real workflows. Metadata should be populated by design—at the moment documents are created or triggered—rather than retrofitted during quality review. When definitions are intuitive and embedded into processes, consistency becomes the default outcome.

Alignment with industry standards such as the TMF Reference Model helps reduce ambiguity and accelerates adoption. Many sponsors and CROs successfully use it as a baseline taxonomy, extending it only where justified by study complexity or regulatory need.

Clear Ownership: Turning Metadata Into an Asset

Metadata quality degrades fastest when ownership is implicit. Scalable governance requires explicit accountability by domain:

-

Clinical Operations owns study, country, and site context

-

Regulatory owns approval and submission attributes

-

Quality Assurance owns QC status and inspection readiness indicators

-

Finance owns payment-relevant flags such as milestone release readiness

Publishing a clear RACI eliminates uncertainty over who may create, edit, review, or approve each field. Equally important is defining creation paths. When a site is added in CTMS, eTMF placeholders with pre-filled metadata should be generated automatically. When a protocol amendment is approved, new version placeholders with effective-dated applicability should be created without manual intervention.

When ownership is explicit, AI and automation can safely fill gaps—without undermining control.

Risk-Based Governance Aligned to Modern GCP Thinking

Governance should not be uniform; it should be proportional to risk. The updated ICH E6(R3) reframes GCP around critical-to-quality (CTQ) principles and targeted oversight. Metadata governance must reflect this shift.

Artifacts linked to CTQ outcomes—such as informed consent forms, safety reports, and ethics approvals—should carry higher-assurance controls: stricter validations, dual review, and tighter lifecycle constraints. Lower-risk artifacts can follow lighter governance paths without compromising compliance.

By binding metadata rules to CTQ risk, organizations enable AI to operate confidently in low-risk areas while preserving human oversight where it matters most.

Harmonizing Identifiers, Versions, and System Integrations

Clean eTMF metadata cannot exist in isolation. It depends on harmonization across CTMS, EDC, and other clinical systems. Study IDs, country and site codes, visit dictionaries, milestone definitions, and amendment identifiers must match across platforms.

Integration boundaries should enforce referential integrity: unknown identifiers are rejected, exceptions are routed with actionable reason codes, and every transaction is auditable. Versioning must include effective dates and applicability windows so historical records remain explainable by cohort, site, and activation timing.

Event-driven integrations are essential. When CTMS marks a site as “ready to activate,” that event should update eTMF expectations and trigger quality gates. When EDC records first patient in, the system should verify that consent artifacts are present and current. Resilient design patterns—queues, idempotent processing, and correlation IDs—ensure traceability from operational trigger to filed artifact.

Regulators expect validated, secure, and traceable systems. Guidance such as the European Medicines Agency principles on computerized systems underscores the importance of data integrity, auditability, and explainability across electronic trial records.

Measuring Quality and Enabling AI—Responsibly

Governance becomes real when it is measured continuously. Leading organizations track a focused set of KPIs:

-

Metadata completeness by field and artifact family

-

First-pass QC success rate

-

Duplicate or misfile rate

-

Exception aging by root cause

-

Audit-trail completeness

Role-based dashboards ensure that study teams, QA, and country leads see the same truth and can self-correct early.

With disciplined metadata in place, AI becomes both practical and defensible. Machine learning can classify artifacts, pre-fill metadata from document content, and detect anomalies such as version conflicts or misfilings. But explainability is mandatory. AI outputs must show which terms, fields, or rules drove a recommendation, and humans must remain in the loop for CTQ artifacts.

Model drift monitoring, governed retraining datasets, and documented intended use are no longer optional—especially when AI outputs may be referenced during inspections.

Metadata Governance as a Product, Not a Project

The most advanced organizations treat metadata governance as a product: owned, versioned, measured, and continuously improved. Changes to templates or dictionaries are piloted, documented, and communicated. Mismatches between CTMS readiness and eTMF completeness are surfaced within hours, not weeks.

When metadata governance operates at this level of maturity, the eTMF evolves from a passive repository into an active, intelligent system of record—trusted by operations, fertile ground for AI, and inspection-ready by default.

Conclusion: Governing Metadata to Unlock the Future

AI-ready eTMF operations do not begin with algorithms. They begin with canonical metadata, clear ownership, risk-based governance, and harmonized systems. By elevating metadata governance to a strategic discipline, life sciences organizations unlock faster execution, lower compliance risk, and sustainable inspection readiness.

In an era where regulators expect continuous control and AI promises exponential efficiency, metadata is no longer plumbing—it is leadership infrastructure.

Subscribe to our Newsletter